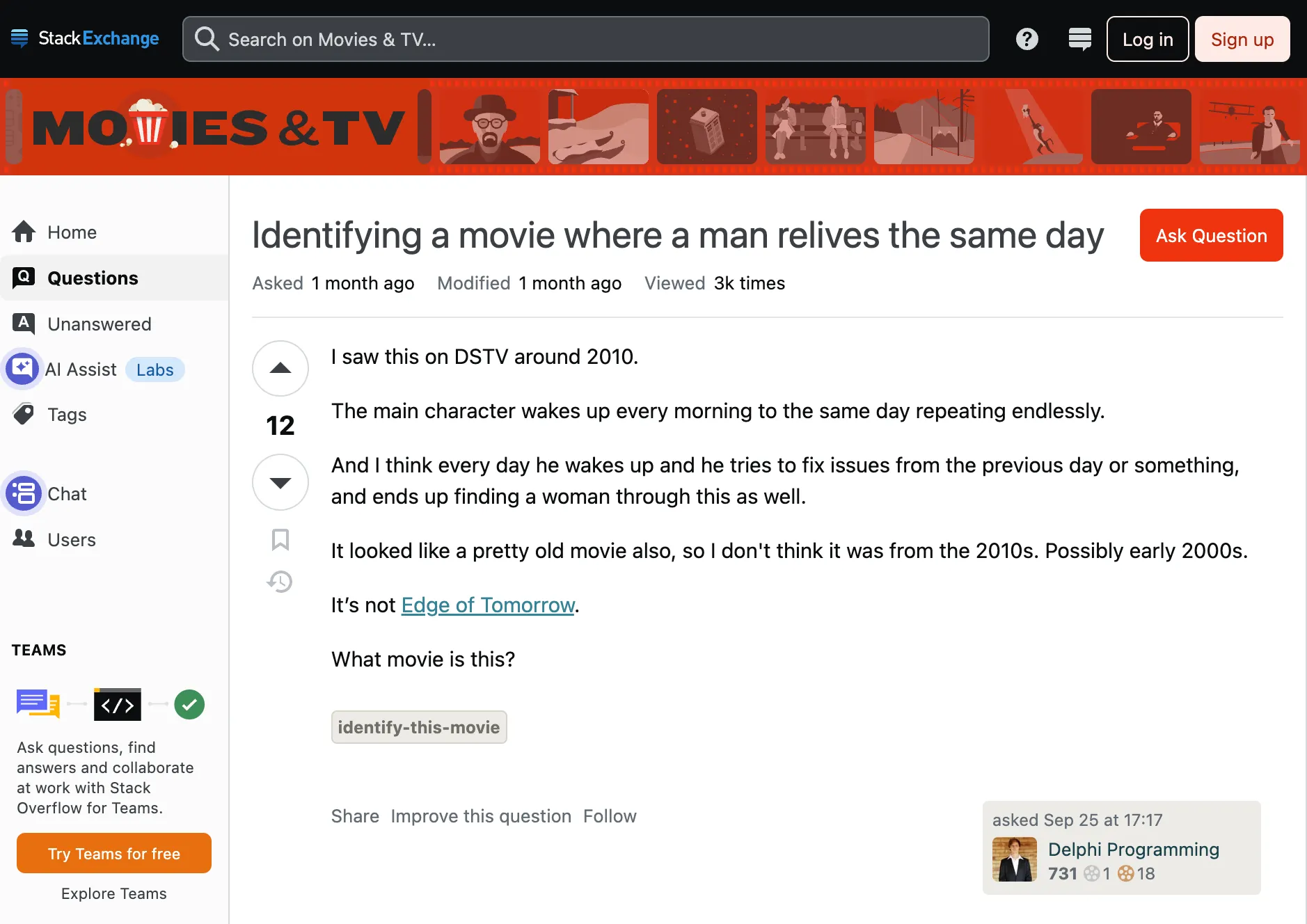

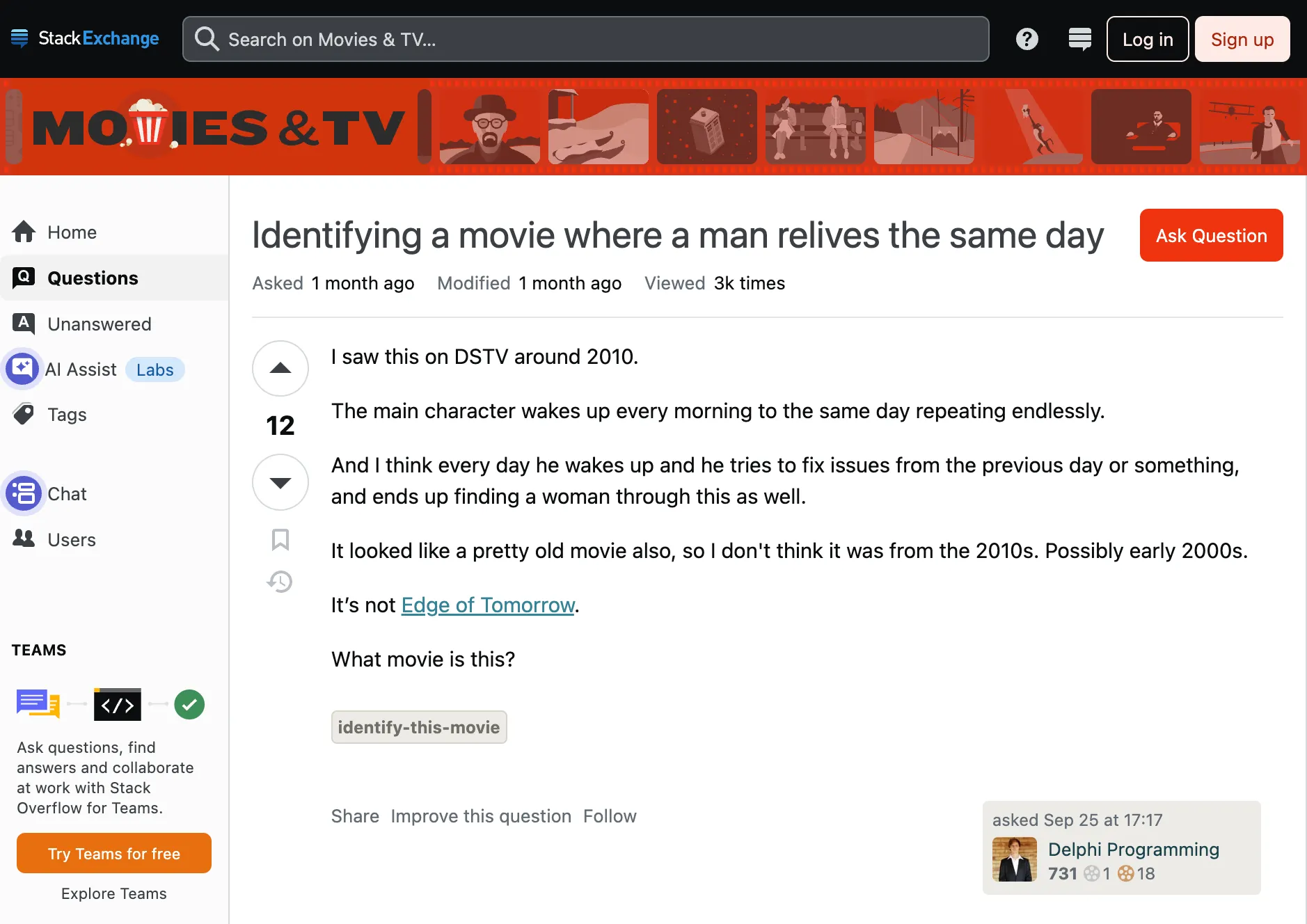

One of my favorite recent questions on Stack Exchange was this one:

An identify-this-movie question on Stack Exchange asking about a movie where someone repeats the same day over and over again.

The user is not from the United States and is fairly young, so they didn’t recognize the 1993 cult classic movie Groundhog Day with Bill Murray.

This kind of question shows up a lot, and there is a whole community of people who try to answer them with diligent, meticulous research. The thing is, questions like that are surprisingly hard to answer. Traditionally, search engines rely on exact keywords, and LLMs lack the structure needed to ground answers in reality. That loose relationship with objective truth is why many people argue, controversially, that AI-generated content should not be allowed on Stack Exchange.

However, new advancements in AI technology give users a shortcut to that meticulous research by surfacing grounding information from structured datasets. That’s what Algolia’s new MCP server is shooting for: it turns the LLM from a confidently oblivious guesser into a fact-summarizing machine. Instead of returning completely made-up information, an LLM that can reach a movie database through the MCP server acts more like your cinephile friend who knows all the movies and can identify them for you easily.

What is an MCP server?

The Model Context Protocol (MCP) defines a universal format for requests and responses between an LLM and some other program. When a model sends a query (“find a movie where a guy repeats the same day”), the MCP server translates that into precise API calls to the underlying system — in this case, an Algolia search index — and returns clean, structured data that the model can use in its reasoning.
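To make that request/response format a little more concrete, here is a minimal sketch of what a tool call looks like on the wire. MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method. The tool name `search_movies`, its `query` argument, and the response shown here are hypothetical, hand-written for illustration; the actual tool names and result shapes depend on what your MCP server exposes.

```python
import json


def build_tool_call(request_id, tool_name, arguments):
    """Build an MCP 'tools/call' request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


def extract_text(response):
    """Collect the text parts from a tools/call result."""
    content = response["result"]["content"]
    return [part["text"] for part in content if part.get("type") == "text"]


# Hypothetical tool name and argument; a real server advertises its own tools.
request = build_tool_call(
    1, "search_movies", {"query": "a guy repeats the same day"}
)

# Hand-written example response, not real server output.
response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "content": [
            {
                "type": "text",
                "text": json.dumps({"title": "Groundhog Day", "year": 1993}),
            }
        ]
    },
}

print(json.dumps(request, indent=2))
print(extract_text(response))
```

The model never sees the search API itself. It only sees a named tool with typed arguments, and the server decides how to turn those arguments into a query.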

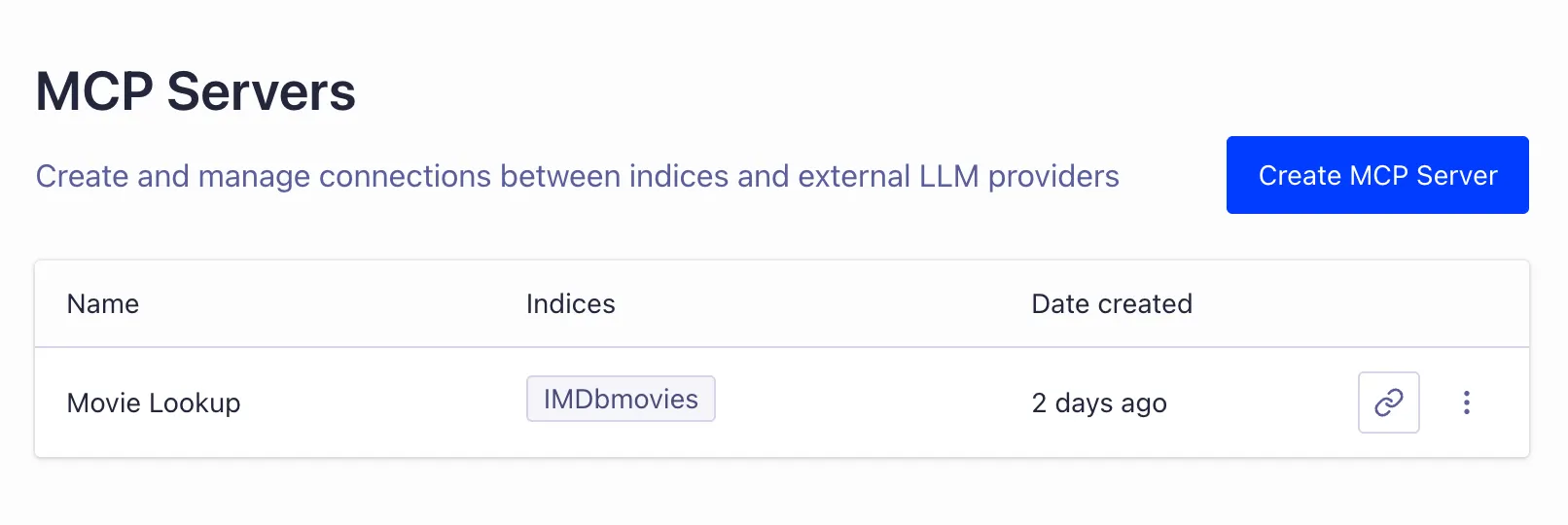

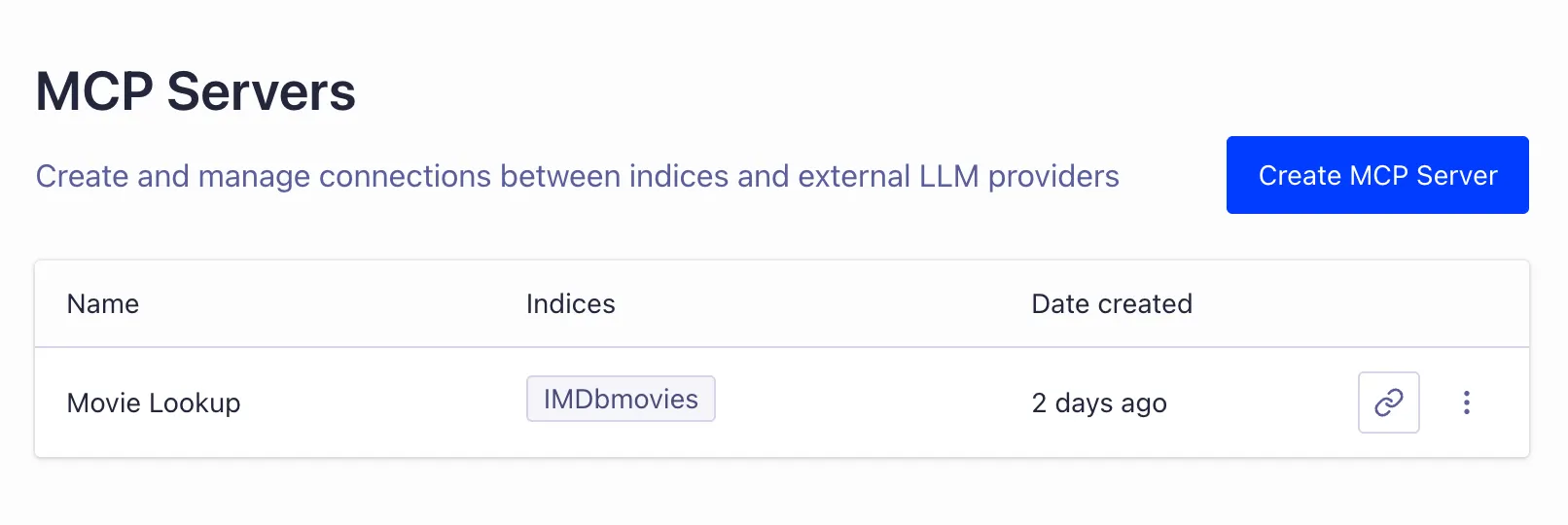

That makes MCP servers direct bridges between natural-language agents and structured search indexes. Instead of writing custom API layers, developers can generate an MCP Server link straight from the Algolia dashboard and securely expose any index to compatible AI systems like ChatGPT or Claude Desktop. Algolia has always been a great place to store your data for retrieval, but the introduction of tools like Agent Studio (our RAG pipeline) and the MCP server expands Algolia into a context layer for AI agents.

What is this useful for?

This industry-wide shift from only enabling user queries to now also fueling agent actions with structured data is a big deal. Why? Because LLMs have historically been somewhat unreliable, which makes many of us wince when they’re given power to affect the world outside of their context window. However, when we ground the LLM in real data, we can trust its actions more. Processes that depend on factual input can now be automated more efficiently and reliably. For example:

Company intranets

Companies often already have structured datasets of important information, like internal documentation, wikis, and support ticket databases. By exposing that information through an Algolia MCP Server, internal AI assistants can securely query live data without custom pipelines or fragile embedding layers. The result is consistent, governed access to verified company knowledge with full traceability and control.

Home assistants

Home assistants are often expected to provide factual information, sometimes with high stakes (e.g. “Alexa, which medicine was I supposed to take today?”). Using structured datasets like schedules, inventories, or household systems indexed in Algolia, the assistant can retrieve real information and perform context-aware actions like checking supplies and adjusting reminders. For example, imagine asking your home assistant what you could make for dinner, and instead of coming up with random recipes, it builds your menu based on a search index of what’s in the fridge.

Customer support

Human customer support agents need to be trained to follow the company’s policies, knowing what information to give out, when, and to whom. AI agents need grounding in real data, through an MCP server or something similar, to match the consistency and trustworthiness of their human counterparts. This also simplifies maintenance, since the staff responsible for drafting company policies and announcements just has to edit the search index through a simple UI rather than opening the Pandora’s box that is the LLM’s system prompt.

Integrated ecommerce agents

Speaking of applications in retail, agents connected to product indexes can answer questions like “Do you have this in red?” or “Would this go with denim?” By querying structured product data through the MCP server, these agents let shoppers discover products conversationally like they would in-store. No manual API calls or coding is needed, since it’s all abstracted away by the MCP server.
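For a rough idea of what is being abstracted away, here is a sketch of how a question like “Do you have this in red?” might become a structured search. It uses Algolia’s `filters` syntax (`attribute:"value"` clauses joined with `AND`). The attribute names `category` and `color` and the helper functions are made up for illustration, and this is not a claim about how the MCP server builds its queries internally.

```python
def build_filters(facets):
    """Join attribute/value pairs into an Algolia-style filters string."""
    clauses = []
    for attribute, value in facets.items():
        text = str(value)
        # Keep the sketch simple: refuse values that would need escaping.
        if '"' in text:
            raise ValueError(f"unsupported quote in value: {text!r}")
        clauses.append(f'{attribute}:"{text}"')
    return " AND ".join(clauses)


def build_search_params(query, facets):
    """Combine free-text search with structured facet filters."""
    return {"query": query, "filters": build_filters(facets)}


# Hypothetical attributes; use whatever your product index defines.
params = build_search_params("jacket", {"category": "jackets", "color": "red"})
print(params)
```

The shopper only types the question. Picking the attributes and values is the agent’s job, and the index guarantees that whatever comes back is a real product.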

Academic research

Researchers can use MCP-connected agents to access structured datasets like academic publication indexes directly from Algolia. This approach streamlines access to complex data collections and keeps whatever analysis the agent runs tied to factual sources.

Movie identification

And finally, the whole reason everybody wants AI in the first place: to answer the question, “What was that movie called?” That’s definitely the main use case, right? ;)

To do this, I loaded a dataset of IMDb movie info into an Algolia index, including titles, years, and plot summaries. Then, using the Algolia dashboard, I clicked “Generate MCP Server link,” dropped that link into ChatGPT’s Developer Mode as a connector, and the model suddenly had a direct pipeline into my movie database.
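If you want to try the loading step yourself, here is one possible sketch using only the Python standard library and Algolia’s REST batch endpoint. It is not the exact script I used. The index name `movies`, the input field names, the placeholder credentials, and the sample record are all assumptions for illustration, and the request is only built here, not sent.

```python
import json
import urllib.request


def to_record(movie):
    """Map one raw movie row to an Algolia record (field names assumed)."""
    return {
        "objectID": movie["id"],
        "title": movie["title"],
        "year": movie["year"],
        "plot": movie["plot"],
    }


def build_batch_request(app_id, api_key, index_name, movies):
    """Build (but do not send) a batch indexing request."""
    body = {
        "requests": [
            # updateObject adds or replaces the record with this objectID.
            {"action": "updateObject", "body": to_record(m)}
            for m in movies
        ]
    }
    return urllib.request.Request(
        url=f"https://{app_id}.algolia.net/1/indexes/{index_name}/batch",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "X-Algolia-Application-Id": app_id,
            "X-Algolia-API-Key": api_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Hand-written sample row; a real dataset would have thousands of these.
movies = [
    {
        "id": "example-1",
        "title": "Groundhog Day",
        "year": 1993,
        "plot": "A weatherman relives the same day over and over.",
    }
]

# Placeholder credentials; swap in your own before sending.
req = build_batch_request("YOUR_APP_ID", "YOUR_WRITE_API_KEY", "movies", movies)
print(req.full_url)
# To actually index the records: urllib.request.urlopen(req)
```

In practice the official API clients or the dashboard’s upload feature will do the same job with less ceremony. The point is only that the index is plain structured records with titles, years, and plots.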

Quick side note: This could be accomplished using RAG, and we’ve actually invested a lot into that approach here at Algolia. Our new product Agent Studio aims to make things like this straightforward. RAG is meant to be very extensible and flexible, which makes it easier to incorporate into production environments and integrated applications. This MCP server, on the other hand, is far simpler to set up if you’re working with an LLM that has a prebuilt UI and MCP support. What MCP lacks in general compatibility, it makes up for in speed on a project like this. In fact, only about 5 minutes after I got access to this feature, I had this demo working and this article in progress.

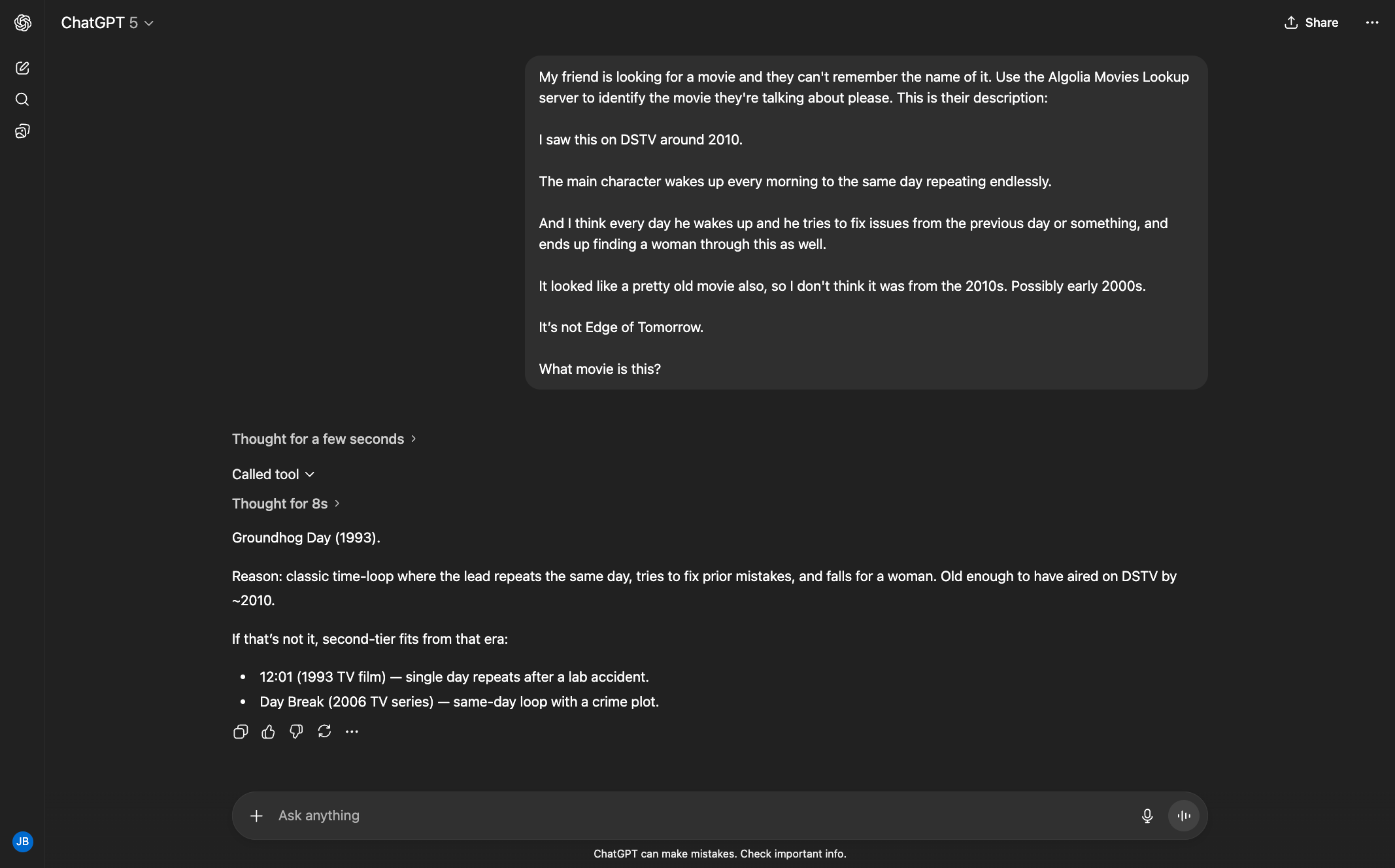

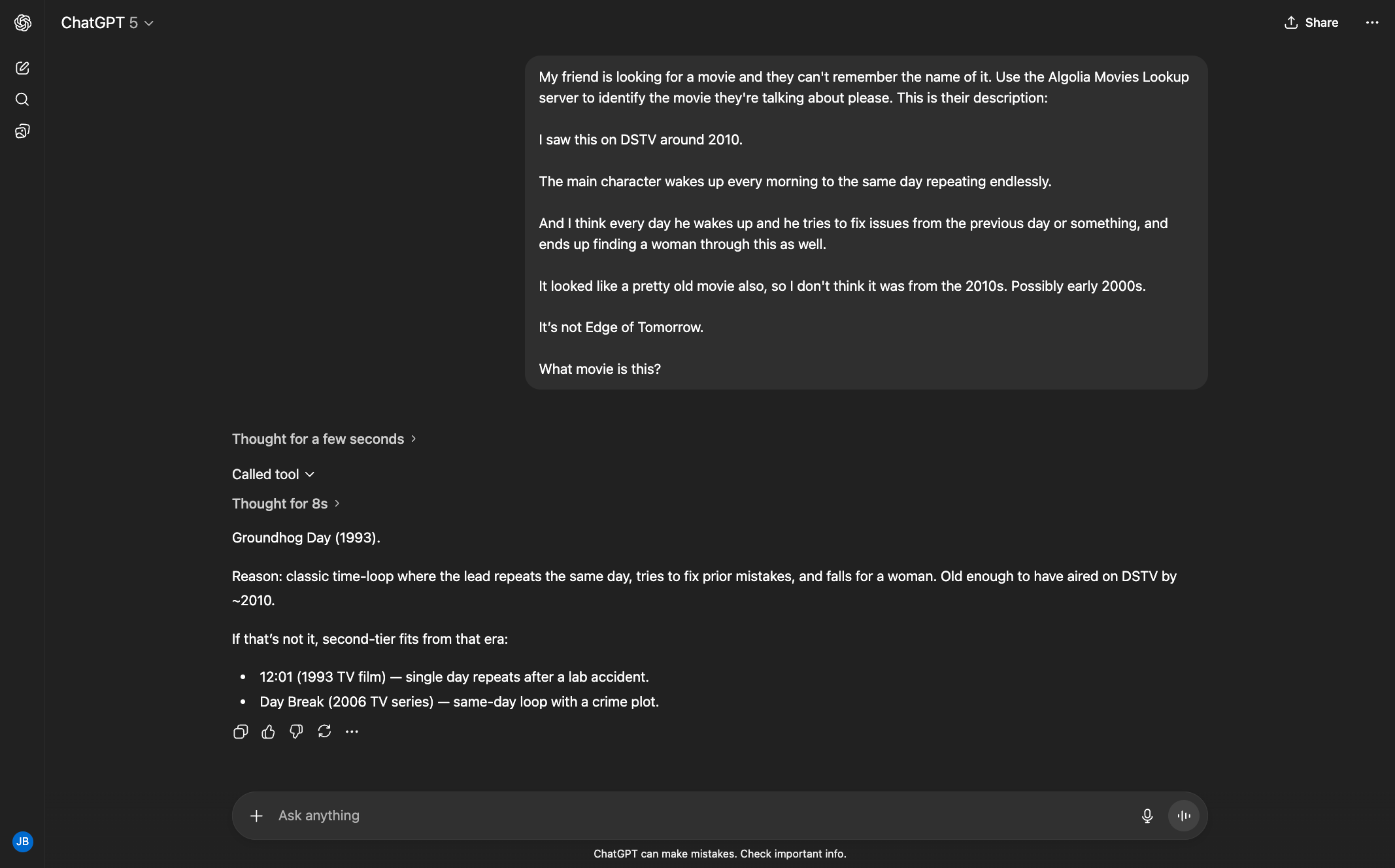

ChatGPT was able to pull data from our movies index and surface answers quickly. For the easy ones, it figured it out on the first try.

But for some others it took more work. We tried to get it to identify a Bollywood movie, and it took a few more steps since the asker didn’t remember a whole lot about the plot. But after a few questions, it successfully identified the movie and even picked out what the person misremembered about it.

This used to take hours of forum sleuthing, but now we can thumb through that data in seconds. This isn’t magic; it’s good data, clean structure, and a standard protocol turning vague human memories into precise, verifiable answers. Of course, I won’t copy and paste these answers verbatim into Stack Exchange, but now I’ve skipped most of the research and can confirm the match by Googling the movie title it returned. Writing the answer myself should now be easy, since I can ask the LLM to remind me of pertinent details, like the reasons for the match and secondary options.

What’s the takeaway from this experiment? AI doesn’t need to replace curiosity — it just needs better footing in reality. If you want to see how an Algolia MCP server changes your own workflows, just open your Algolia dashboard, generate an MCP link in the Generative AI section, and let your favorite LLM start talking to your data.