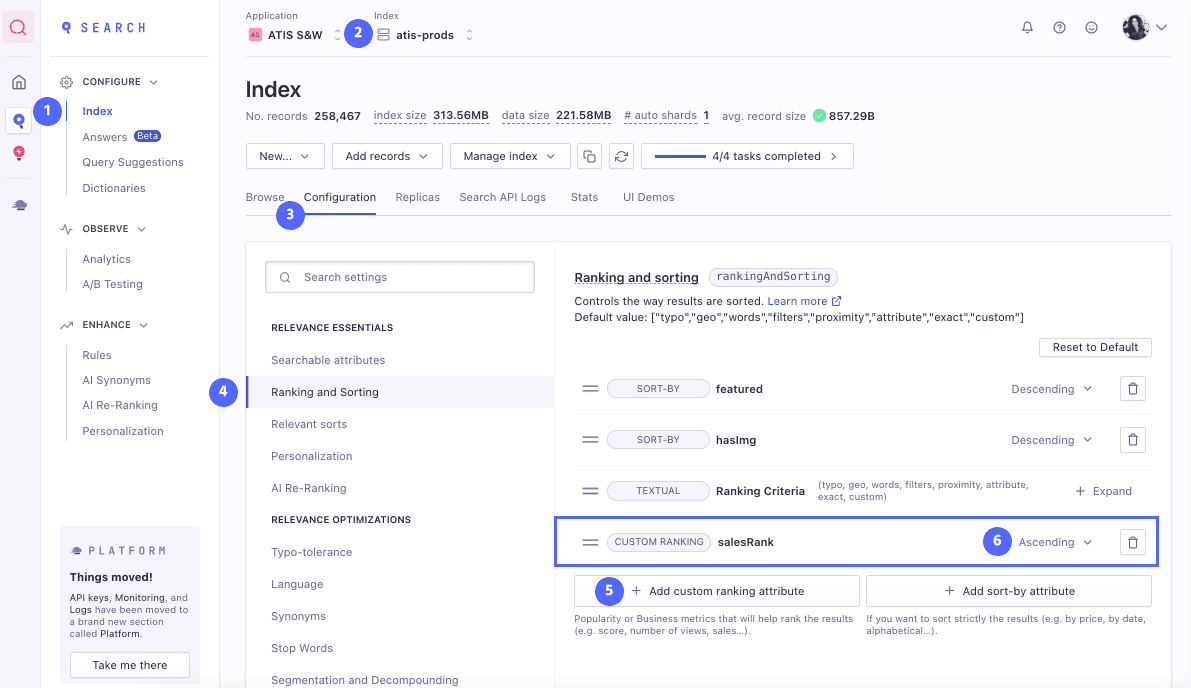

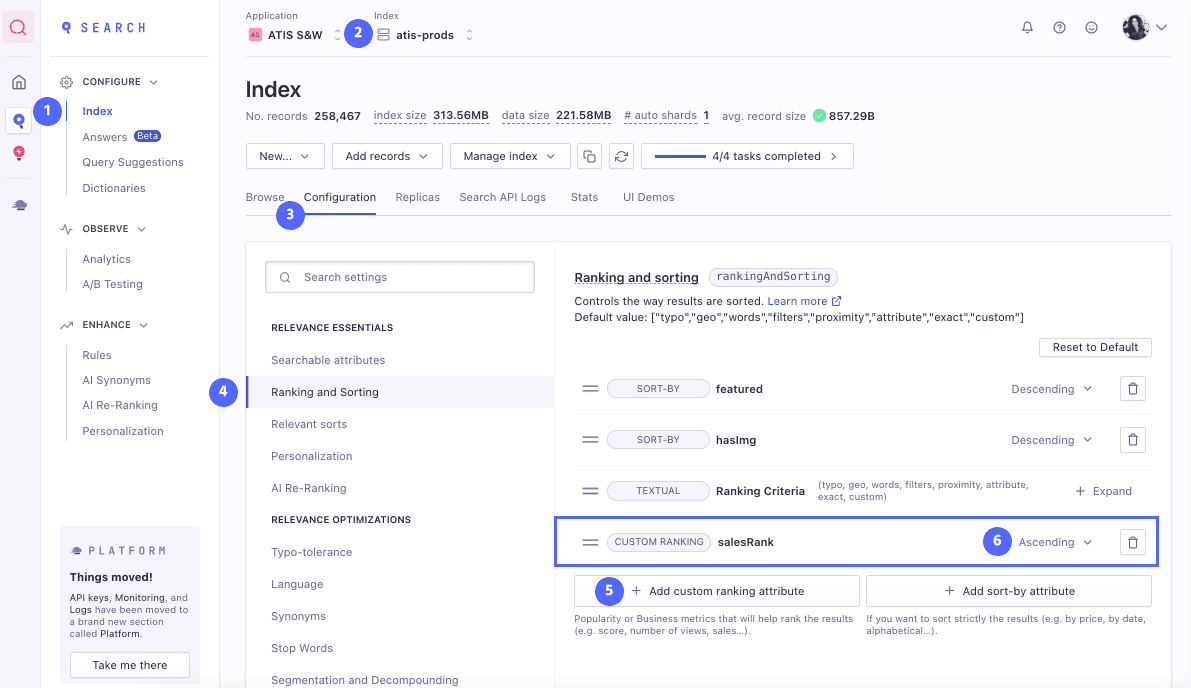

Step 1: Add a selected custom attribute (for example, salesRank attribute) to your main catalog index (this is index A in your A/B test)

For example, a numberOfLikes attribute allows sorting the search results based on the “likes” data gathered from site users. Alternatively, a salesRank attribute allows sorting search results based on internally gathered sales data.

For step-by-step instructions, refer to the guide “Configuring Custom Ranking for Business Relevance”.

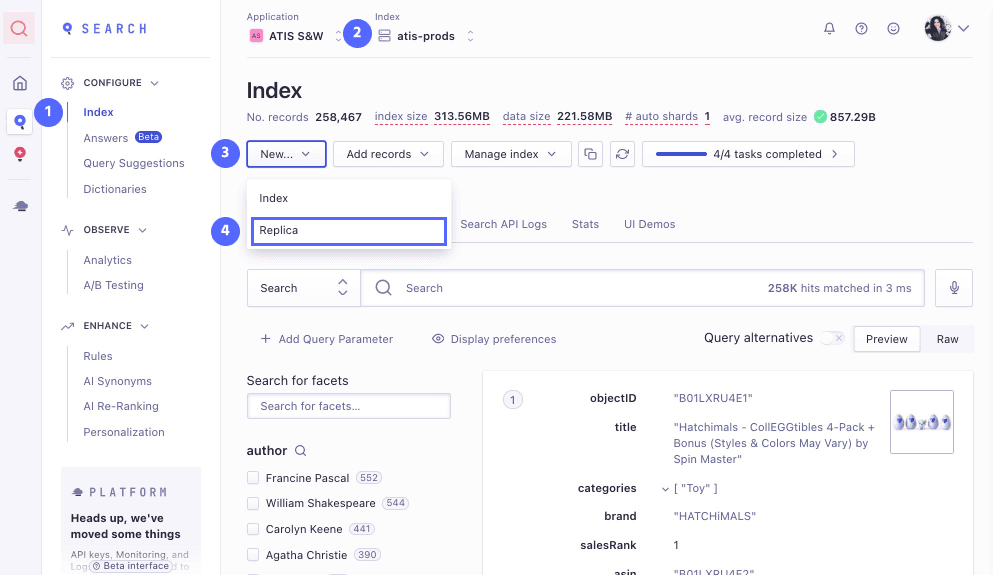

Step 2: Create index B as a replica of A.

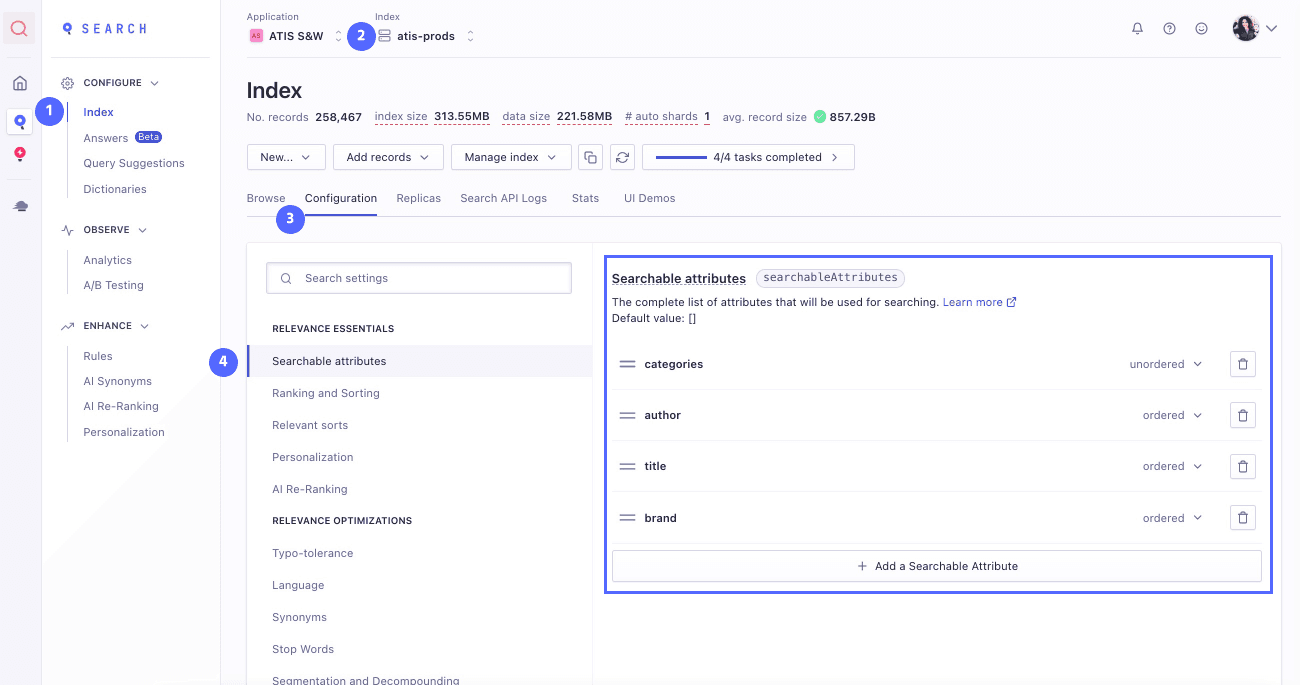

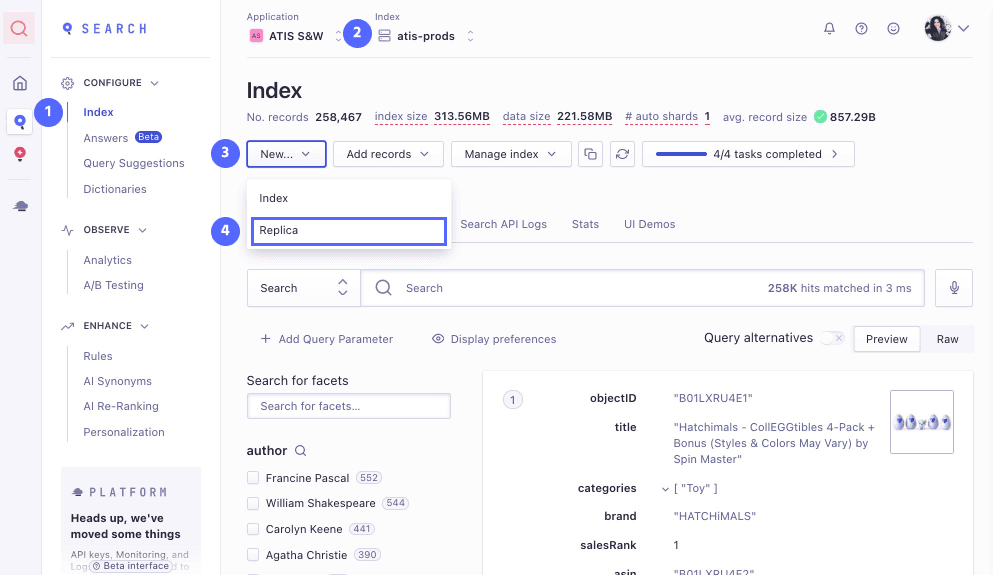

1. Load the dashboard section “Index”

2. Select the correct index

3. In the top left section click on the “New” button

4. In the drop down menu select “Replica”

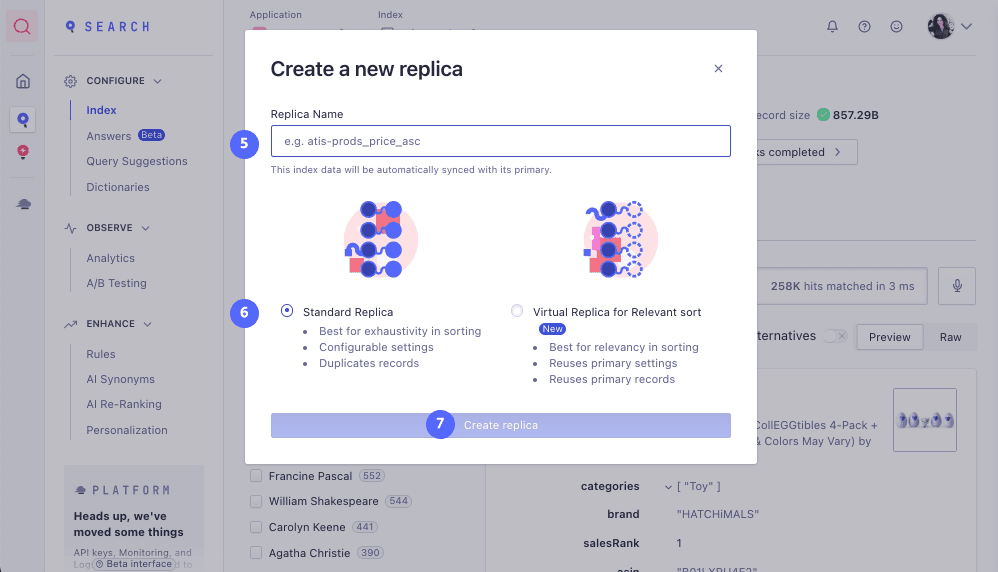

-

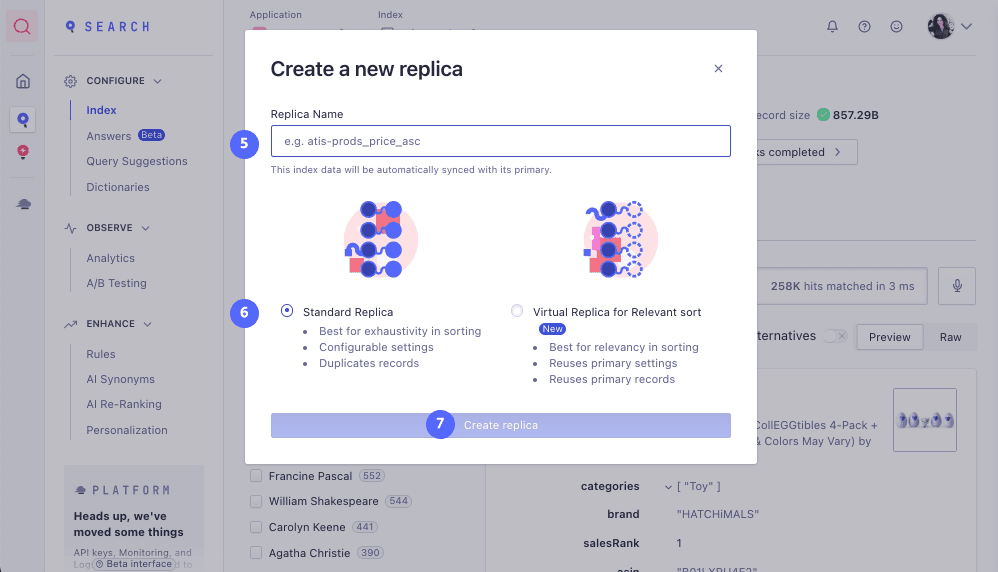

In the pop-up window “Create a new replica” type in the replica name (Index B)

-

Select the the type: “standard” or “virtual”

-

Review before saving the replica index
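
For readers who manage index settings programmatically rather than through the dashboard, the replica choice above can be sketched as a settings fragment. This assumes the `replicas` index setting, in which a standard replica is listed by name and a virtual replica is written as `virtual(name)`; the helper `replica_settings` and the index name `index_b` are hypothetical, and the fragment should be checked against the current settings reference before use.

```python
def replica_settings(replica_name, replica_type="standard"):
    """Build the settings fragment that declares one replica on the primary index.

    Note: this sketch lists only the new replica. If the primary index
    already has replicas, they would need to be included in the list too.
    """
    if replica_type == "standard":
        entry = replica_name
    elif replica_type == "virtual":
        entry = f"virtual({replica_name})"
    else:
        raise ValueError("replica_type must be 'standard' or 'virtual'")
    return {"replicas": [entry]}


print(replica_settings("index_b"))             # {'replicas': ['index_b']}
print(replica_settings("index_b", "virtual"))  # {'replicas': ['virtual(index_b)']}
```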

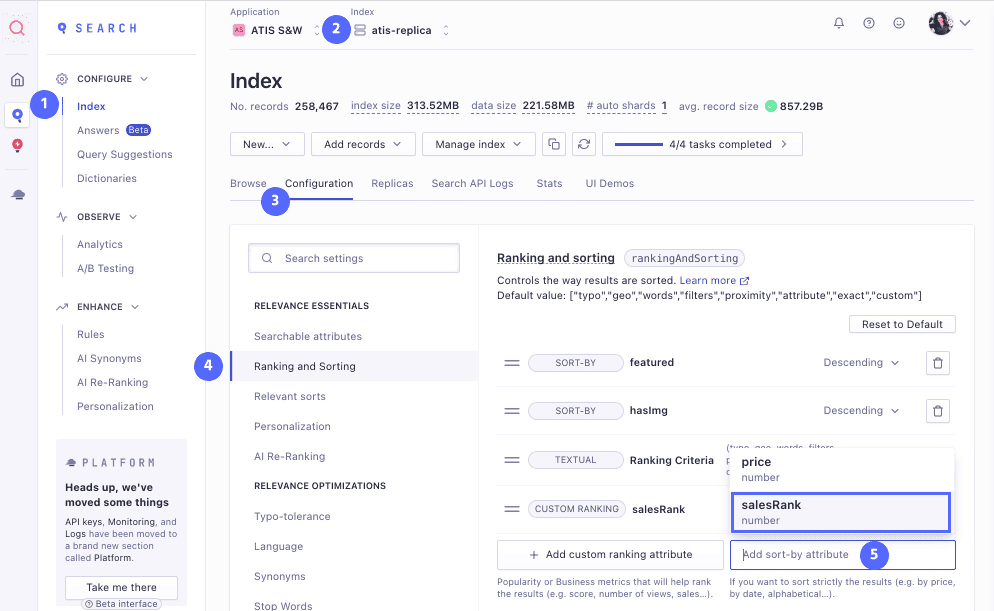

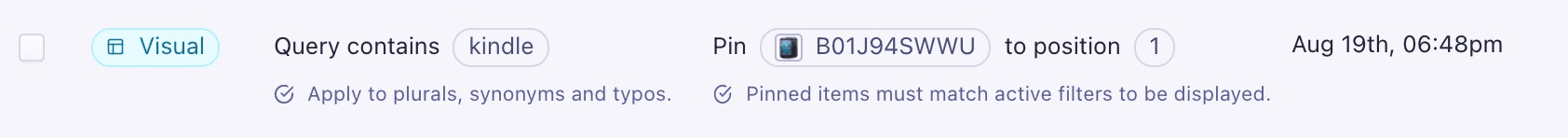

Step 3: Adjust index B’s settings by sorting its records based on the selected custom attribute (for example, salesRank attribute)

-

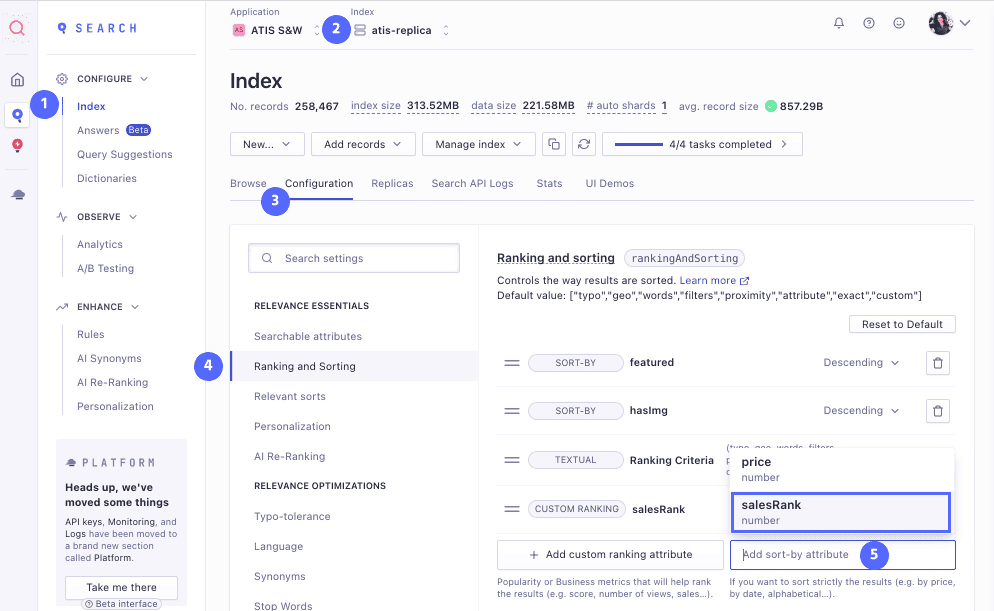

Load the dashboard

section “Index”

-

Verify the correct replica index is selected

-

In the top navigation menu select “Configuration” tab

-

Click on “Ranking and Sorting”

-

Click on the button “Add sort-by attribute” and select the relevant attribute. (For example:

salesRank)

-

Note: the ranking attributes need to be pre-configured by the data engineering team.

-

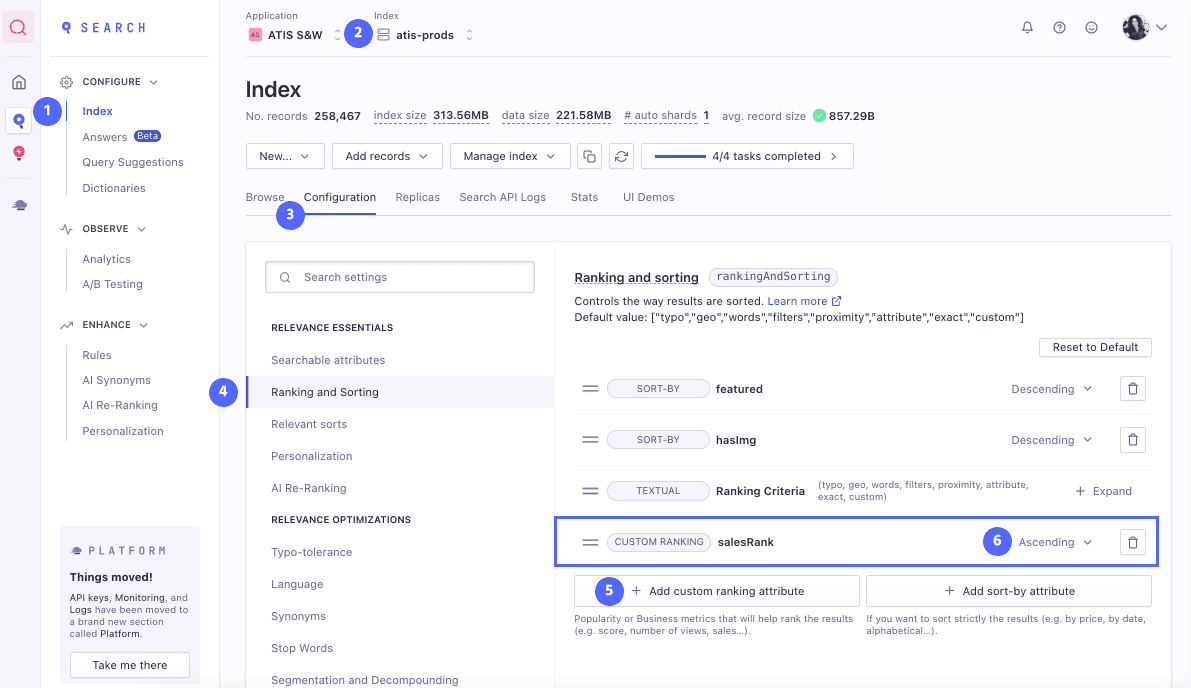

Once you add a sort-by attribute, it will appear on the top of the “Ranking and sorting” list.

Select the desired order for this attribute: ascending or descending and save your changes.

-

Note: While each ranking and sorting attribute’s position can be changed by dragging and dropping

it higher or lower on the list, it is not recommended to do so.

-

Review the changes before saving
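
The effect of adding a sort-by attribute can be sketched as a settings fragment for a standard replica. This assumes the `ranking` index setting, where a sort-by attribute is written as `asc(attribute)` or `desc(attribute)` and placed ahead of the default ranking criteria; the helper `sort_by_settings` is hypothetical. A virtual replica is configured differently and is not covered by this sketch.

```python
# Default ranking criteria, in their default order.
DEFAULT_RANKING = [
    "typo", "geo", "words", "filters",
    "proximity", "attribute", "exact", "custom",
]


def sort_by_settings(attribute, order="desc", ranking=DEFAULT_RANKING):
    """Put the sort-by attribute first, keeping the other criteria in order."""
    if order not in ("asc", "desc"):
        raise ValueError("order must be 'asc' or 'desc'")
    return {"ranking": [f"{order}({attribute})"] + list(ranking)}


settings = sort_by_settings("salesRank", "desc")
print(settings["ranking"][0])  # desc(salesRank)
```

Keeping the remaining criteria in their original order mirrors the recommendation above not to rearrange the list.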

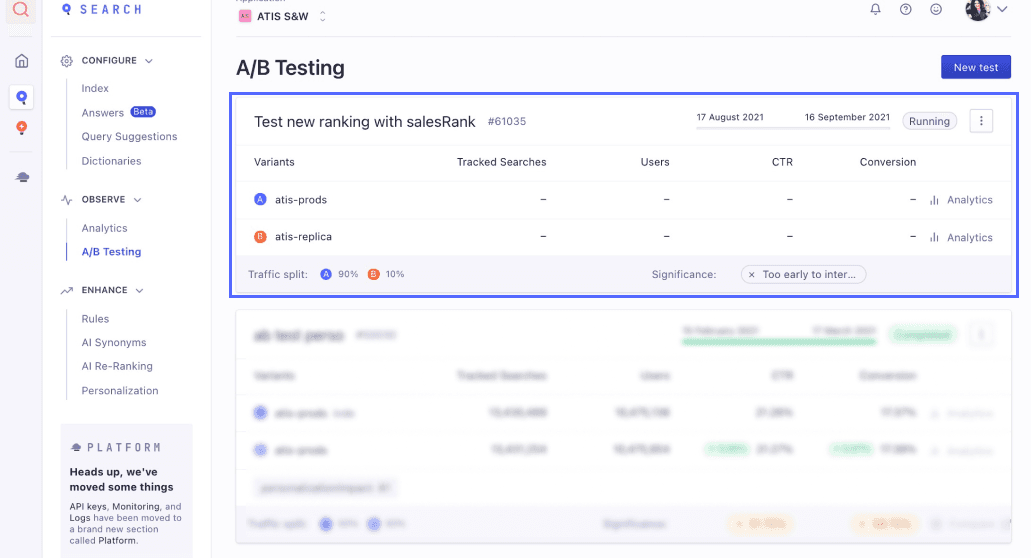

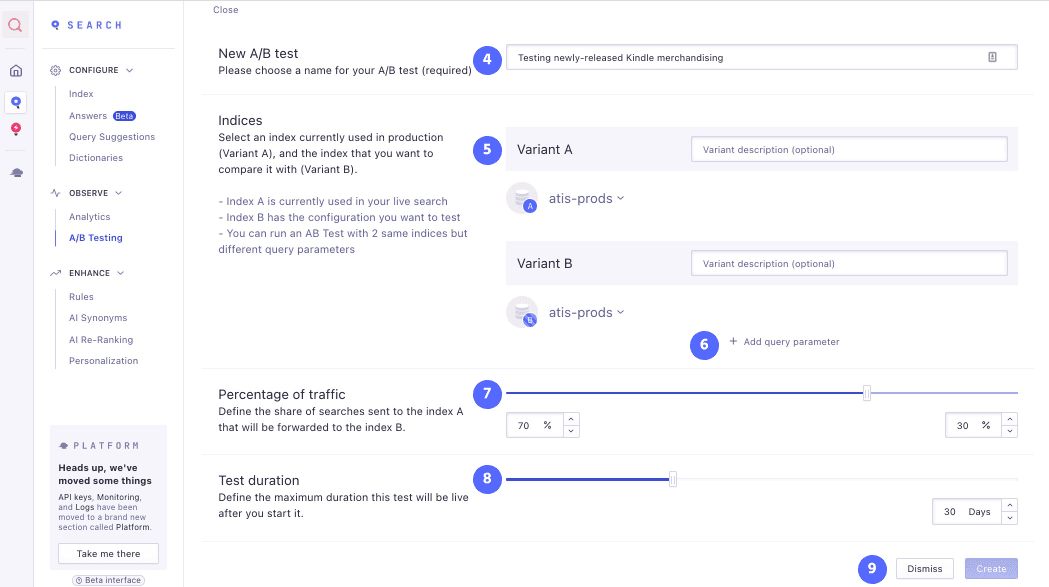

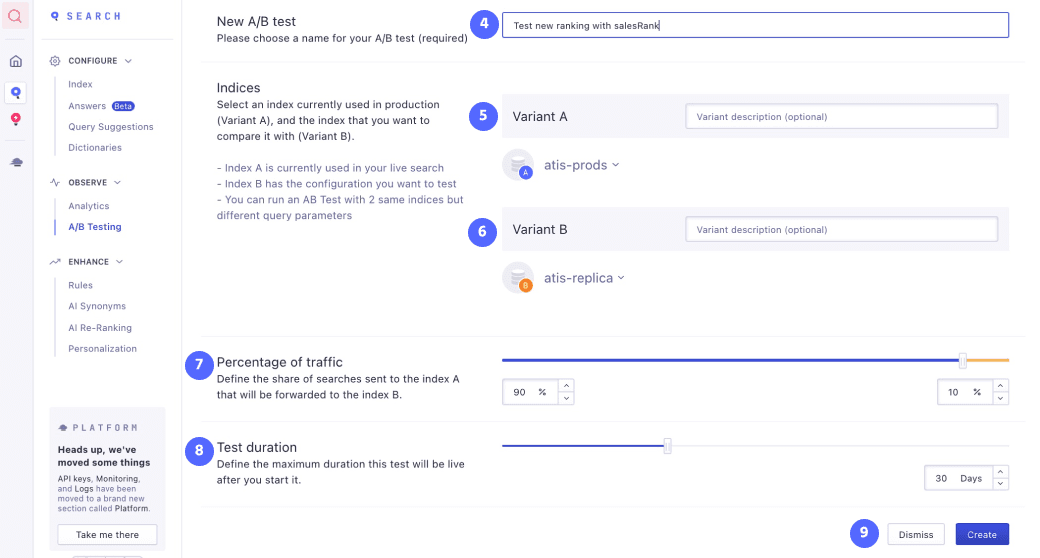

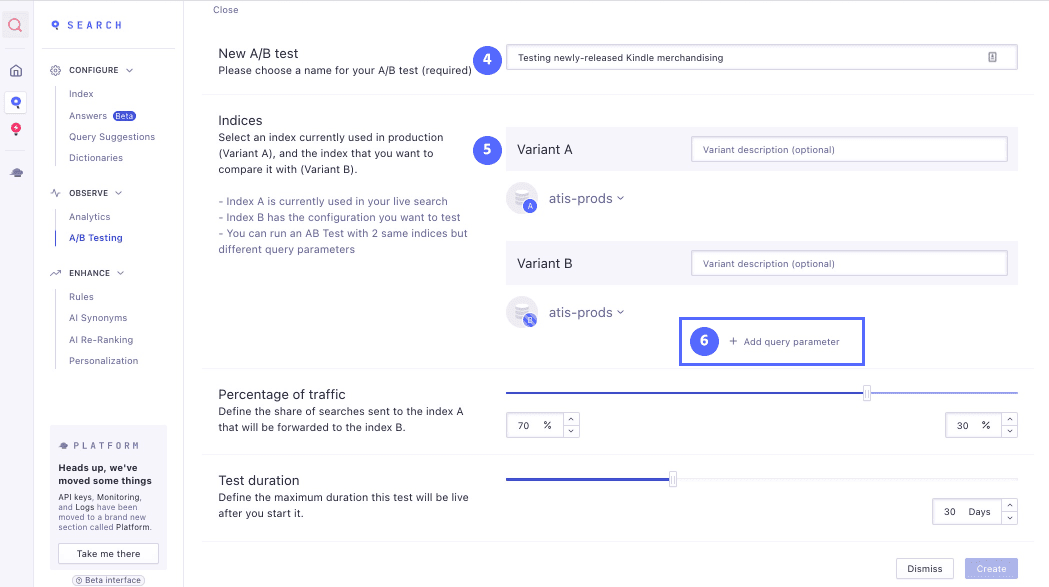

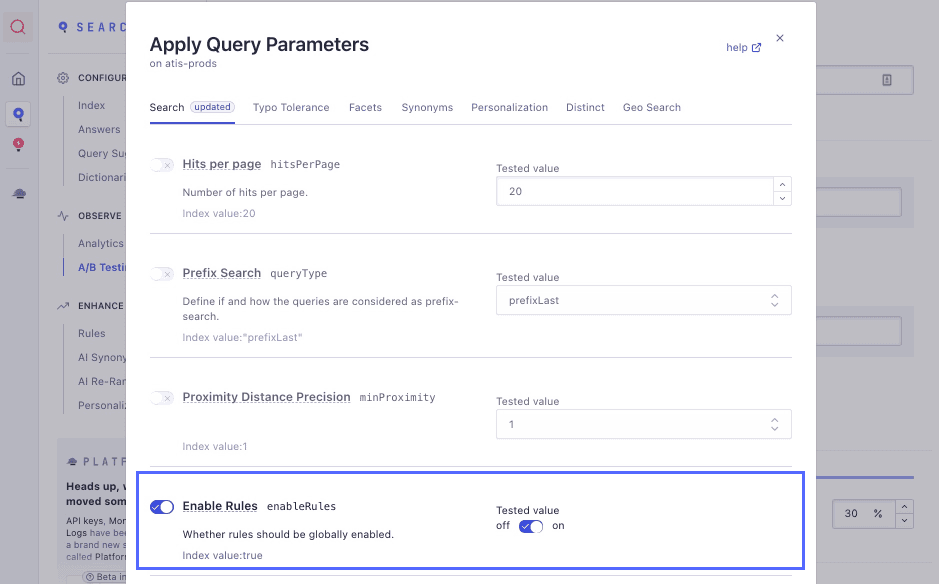

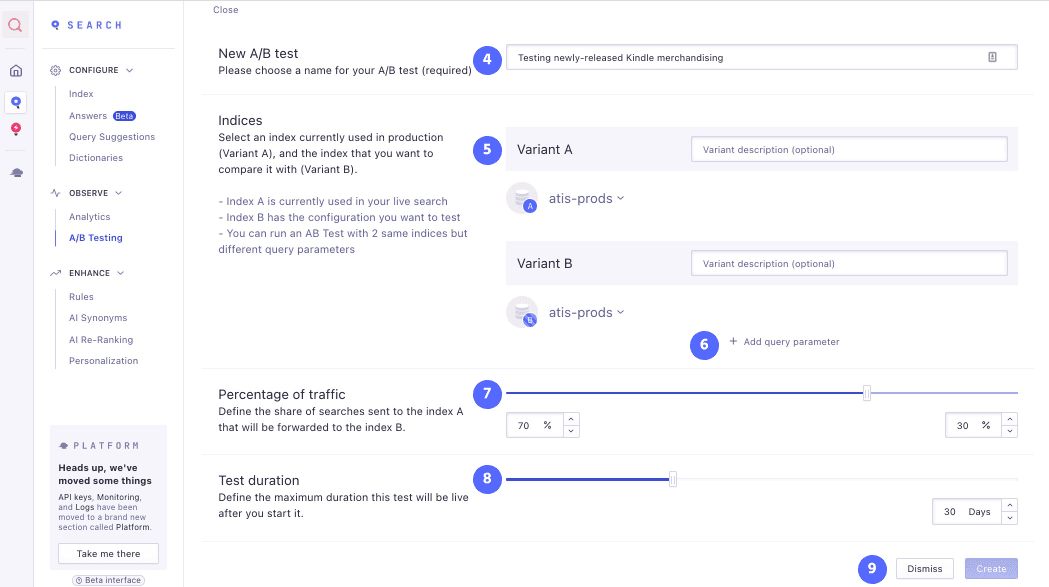

Step 4: Create an A/B test

Tips for creating an A/B test:

- Assign your test a self-explanatory and meaningful name. For example: “Test new ranking with salesRank”.

- Let the test run for 30 days to be sure you collect enough data and a good variety of searches, and set the date parameters accordingly.

- In most cases it’s fine to set the test to use a 50/50 split. If you are concerned that the new version might have a negative impact, you can show it to a smaller percentage of customers. The trade-off is that a smaller sample means your test will take longer to reach statistical significance. This is particularly true if your site does not have a large volume of visitors.

- When your test reaches 95% confidence or greater, you can see whether your change improves your search, and whether the improvement is large enough to justify the implementation cost.
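
The trade-off between traffic split and test duration, and the idea of a 95% confidence threshold, can be illustrated with a short calculation. This is a generic sketch, not the dashboard's own method: the confidence figure uses a textbook pooled two-proportion z-test, and all traffic and conversion numbers are hypothetical.

```python
import math


def days_to_reach(sample_per_variant, daily_searches, smaller_percent):
    """Days until the smaller variant has collected the target number of searches."""
    return math.ceil(sample_per_variant * 100 / (daily_searches * smaller_percent))


def confidence(conv_a, n_a, conv_b, n_b):
    """Two-sided confidence (1 - p-value) from a pooled two-proportion z-test."""
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return 1 - p_value


# With 2,000 searches a day and a target of 10,000 searches per variant:
print(days_to_reach(10_000, 2_000, 50))  # 10 days with a 50/50 split
print(days_to_reach(10_000, 2_000, 10))  # 50 days with a 90/10 split

# Hypothetical outcome: 100 vs 130 conversions out of 1,000 searches each.
print(confidence(100, 1_000, 130, 1_000) >= 0.95)  # True
```

In this made-up scenario, sending only 10% of traffic to the new variant makes the test take five times longer to collect the same sample.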

-

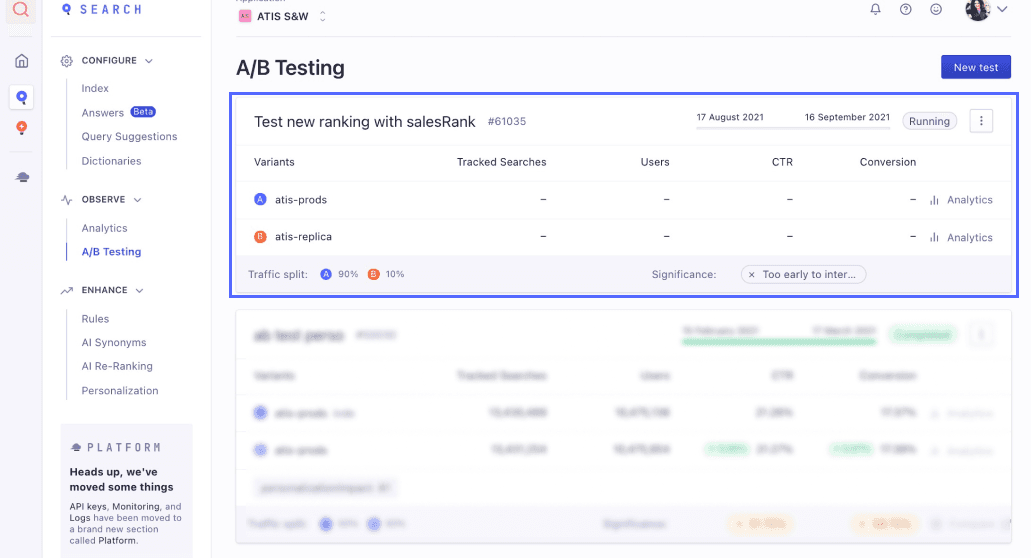

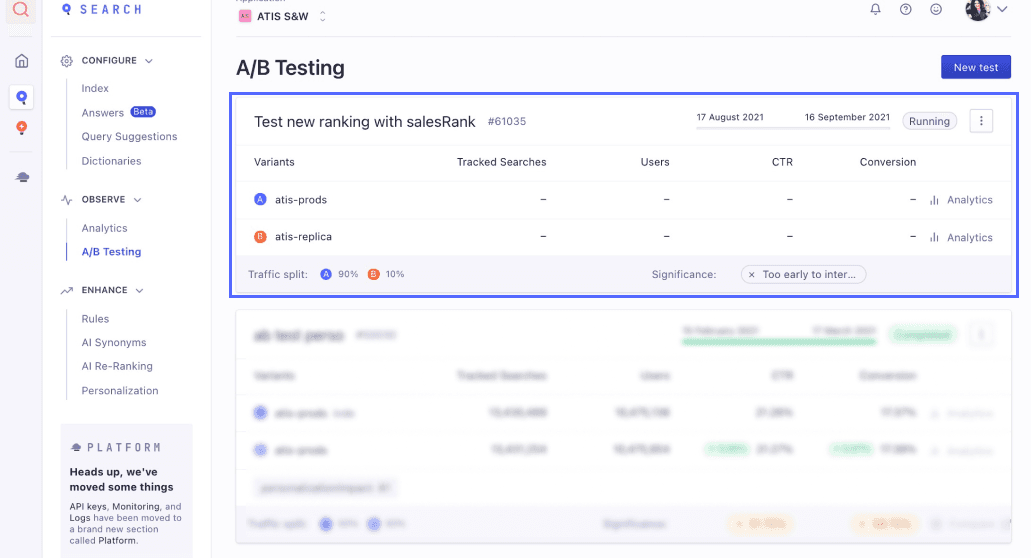

Load the dashboard section “A/B

testing”

- Verify the correct application is selected

- In the top right section click on the “New test” button

- Select an informative and self-explanatory name for the test

- Select “Variant A”: the index currently used for the live search

- Select “Variant B”: a replica of the index chosen as Variant A, with the configuration changes that you would like to test applied to it

- Define the percentage of traffic that will be directed to each variant

- Set a test duration. The recommended minimum duration is 30 days.

- Review the changes before saving

- The new A/B test will appear in the A/B testing overview section of the dashboard, where you can view and analyse it once user data starts aggregating
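
The choices made in the steps above (name, two variants, traffic split, duration) can be summarised as a single structure. The sketch below only builds a dictionary and does not call any service. The field names `name`, `variants`, `index`, `trafficPercentage` and `endAt` are an assumption based on the A/B testing API and should be checked against the current reference; the helper `ab_test_payload` and the index names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def ab_test_payload(name, index_a, index_b, percent_b=50, days=30, start=None):
    """Describe an A/B test: two variants, a traffic split and an end date."""
    if not 0 < percent_b < 100:
        raise ValueError("percent_b must be between 1 and 99")
    start = start or datetime.now(timezone.utc)
    end = start + timedelta(days=days)
    return {
        "name": name,
        "variants": [
            {"index": index_a, "trafficPercentage": 100 - percent_b},
            {"index": index_b, "trafficPercentage": percent_b},
        ],
        "endAt": end.strftime("%Y-%m-%dT%H:%M:%SZ"),
    }


payload = ab_test_payload(
    "Test new ranking with salesRank",
    "index_a",
    "index_b",
    start=datetime(2024, 1, 1, tzinfo=timezone.utc),
)
print(payload["endAt"])  # 2024-01-31T00:00:00Z
```

Passing an explicit `start` keeps the example reproducible; without it, the end date is counted from the current time.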